Artificial neural networks (ANNs) are one of the many methods used in AI, which is not new and has been around for decades. AI is sometimes described as the “second best” kind of algorithm because it is usually applied when the best algorithm cannot be found. Businesses have not used AI intensively because AI normally provides approximate rather than precise answers, and most businesses require exact answers, e.g., trade or do not trade, rather than an 80% chance of profiting if trading.

However, many recent applications such as autonomous driving, speech recognition, and computer vision do not have exact answers, because there is an almost infinite number of cases to consider. AI then becomes an effective way to handle these applications.


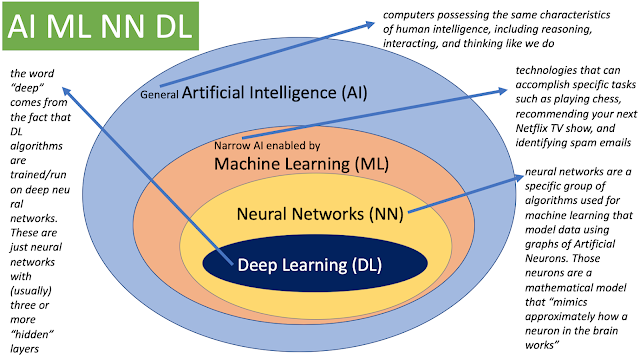

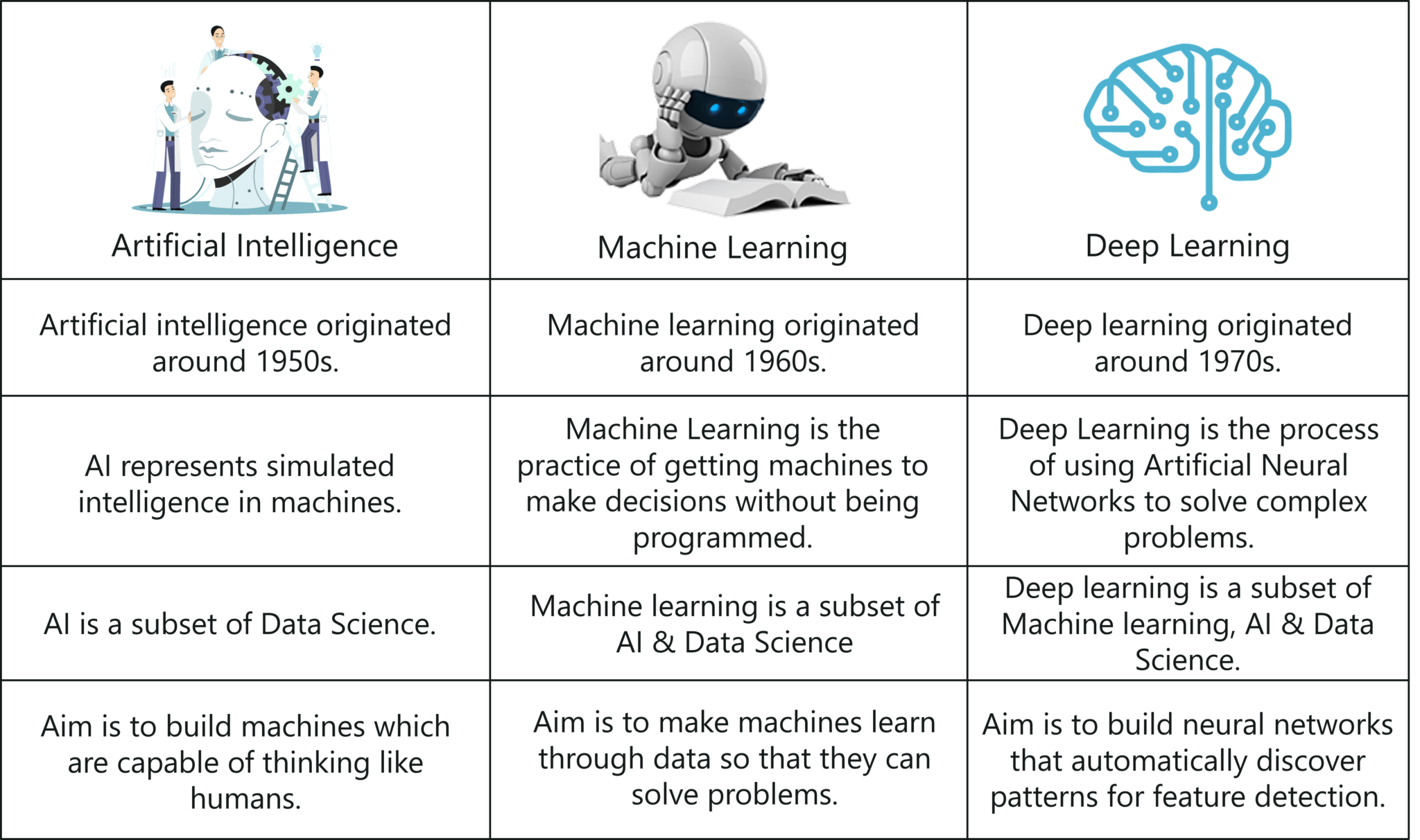

The picture explains the differences among artificial intelligence (AI), machine learning (ML), and deep learning (DL), which is a kind of neural network with many layers.


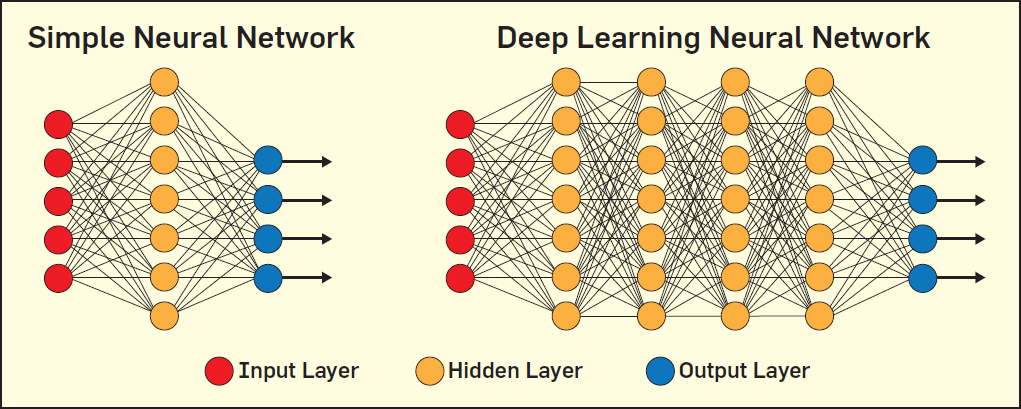

Deep learning is a very trendy topic these days and is based on artificial neural networks, which will be discussed in the following slides.

