A World Wide Web Search Engine

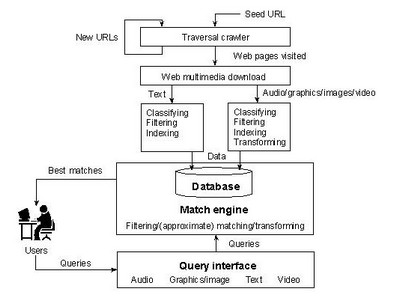

The first two programming exercises involve building a web search engine, which consists of the following three components:

- Traversal crawlers,

- Indexing software, and

- Search and ranking software.
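A traversal crawler can be pictured as a breadth-first search over the web's link graph. The sketch below assumes a hypothetical in-memory `WEB` dictionary that maps each page to the pages it links to. `crawl` and `max_pages` are illustrative names, not part of the exercise. A real crawler would download each page over HTTP and extract its hyperlinks instead.

```python
from collections import deque

# Hypothetical in-memory "web": each URL maps to the URLs its page links to.
# A real crawler would fetch each page over HTTP and parse its links instead.
WEB = {
    "a.html": ["b.html", "c.html"],
    "b.html": ["c.html", "d.html"],
    "c.html": ["a.html"],
    "d.html": [],
}

def crawl(seed: str, links: dict[str, list[str]], max_pages: int = 100) -> list[str]:
    """Breadth-first traversal from a seed URL; returns URLs in visit order."""
    visited: list[str] = []
    seen = {seed}               # URLs already queued, so none is visited twice
    frontier = deque([seed])    # FIFO queue yields breadth-first order
    while frontier and len(visited) < max_pages:
        url = frontier.popleft()
        visited.append(url)     # "fetch" the page (here we only record it)
        for target in links.get(url, []):
            if target not in seen:
                seen.add(target)
                frontier.append(target)
    return visited

print(crawl("a.html", WEB))  # → ['a.html', 'b.html', 'c.html', 'd.html']
```

The `seen` set keeps the crawler from looping on cycles such as `c.html` linking back to `a.html`, and `max_pages` bounds the crawl.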

- Indexing software: Automatic indexing is the process of algorithmically examining information items to build a data structure that can be quickly searched.

- Search and ranking software: This software analyzes a query and compares it against the indexes to find the relevant pages and determine the order in which to display them.
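Indexing and search can be illustrated together with a small inverted index. This is a minimal sketch, not the exercise's required design: `tokenize`, `build_index`, `search`, and the sample `DOCS` are hypothetical, and TF-IDF scoring with a smoothed IDF is only one common ranking choice.

```python
import math
import re
from collections import Counter, defaultdict

def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric terms."""
    return re.findall(r"[a-z0-9]+", text.lower())

def build_index(docs: dict[str, str]) -> dict[str, dict[str, int]]:
    """Inverted index: term -> {doc_id: occurrences of the term in that doc}."""
    index: dict[str, dict[str, int]] = defaultdict(dict)
    for doc_id, text in docs.items():
        for term, count in Counter(tokenize(text)).items():
            index[term][doc_id] = count
    return dict(index)

def search(query: str, index: dict[str, dict[str, int]], n_docs: int) -> list[tuple[str, float]]:
    """Score documents by summed TF-IDF over the query terms; best first."""
    scores: dict[str, float] = defaultdict(float)
    for term in set(tokenize(query)):
        postings = index.get(term, {})
        if not postings:
            continue
        idf = math.log(1 + n_docs / len(postings))  # rarer terms weigh more
        for doc_id, tf in postings.items():
            scores[doc_id] += tf * idf
    # Descending score; ties broken by doc_id for a deterministic order.
    return sorted(scores.items(), key=lambda item: (-item[1], item[0]))

# Hypothetical sample pages.
DOCS = {
    "d1": "web search engines crawl the web",
    "d2": "an index makes search fast",
    "d3": "crawlers follow links between pages",
}
index = build_index(DOCS)
print([doc for doc, _ in search("web search", index, len(DOCS))])  # → ['d1', 'd2']
```

Because the index already stores per-document term counts, `search` can score pages without rereading their text; a different ranking formula would only change the scoring loop.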