Backpropagation is a supervised learning algorithm for training artificial neural networks. The algorithm analyzes the training data and produces an inferred function, which can be used to map new examples.

The training data consists of example pairs of an input object (typically a vector) and a desired output value (also called the supervisory signal). The learning algorithm has to generalize from the training data to unseen situations in a “reasonable” way.

When we design a neural network, we begin by initializing the weights with random values. As a result, the model's output may differ greatly from the actual output; that is, the error is large. We therefore need to adjust the parameters (weights) so that the error becomes as small as possible.

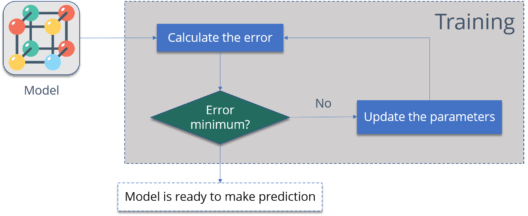
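A minimal sketch of this starting point, assuming a single sigmoid neuron, a weight and bias range of [-1, 1], mean squared error as the error measure, and logical OR as toy training data. All of these are illustrative choices, and `init_params`, `predict`, and `mean_squared_error` are hypothetical names:

```python
import math
import random


def init_params(n_inputs, seed=42):
    """Draw random starting weights and a bias (assumed range [-1, 1])."""
    rng = random.Random(seed)
    weights = [rng.uniform(-1.0, 1.0) for _ in range(n_inputs)]
    bias = rng.uniform(-1.0, 1.0)
    return weights, bias


def predict(weights, bias, x):
    """Output of a single sigmoid neuron for the input vector x."""
    z = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1.0 / (1.0 + math.exp(-z))


def mean_squared_error(weights, bias, data):
    """Average squared gap between the model's output and the desired output."""
    return sum((predict(weights, bias, x) - target) ** 2 for x, target in data) / len(data)


# Toy training pairs (input vector, desired output): logical OR (illustrative).
data = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 1)]

weights, bias = init_params(2)
print("error with random weights:", mean_squared_error(weights, bias, data))
print("error with hand-picked weights:", mean_squared_error([10.0, 10.0], -5.0, data))
```

Because a sigmoid neuron whose weights and bias all lie in [-1, 1] can output at most about 0.95 on these inputs, the random starting point always leaves a noticeable error on the OR targets, while hand-picked weights such as `[10, 10]` with bias `-5` bring it close to zero. Training is the process of finding such parameters automatically.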

Put another way, we need to train our model. One way to train it is backpropagation, which involves the following steps:


- Calculate the error: How far is your output from the actual output?

- Minimize the error: Check whether the error has been minimized.

- Update the parameters: If the error is still large, update the parameters (weights and biases), then check the error again. Repeat this process until the error is minimized.

- The model is ready to make predictions: Once the error is minimized, feed new inputs to the model and it will produce outputs.

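The steps above can be sketched as a small training loop. This is an illustration under stated assumptions, not a definitive implementation: it assumes NumPy, a toy XOR dataset, a 2-4-1 network with sigmoid activations, mean squared error as the error measure, and plain gradient descent for the update step (the text does not specify how the parameters are updated). The backward pass is where backpropagation happens: it applies the chain rule from the output layer back to the input layer to get the gradient of the error with respect to every weight and bias. `train_xor` and `predict` are hypothetical names.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def train_xor(hidden=4, lr=1.0, epochs=20000, tol=0.01, seed=0):
    """Train a tiny 2-hidden-1 sigmoid network on XOR (illustrative setup).

    Returns the learned parameters and the error recorded at each epoch.
    """
    rng = np.random.default_rng(seed)
    # Assumed toy data: XOR input vectors and desired outputs.
    X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
    y = np.array([[0], [1], [1], [0]], dtype=float)

    # Start from random weights (biases start at zero).
    W1 = rng.normal(size=(2, hidden))
    b1 = np.zeros((1, hidden))
    W2 = rng.normal(size=(hidden, 1))
    b2 = np.zeros((1, 1))

    history = []
    for _ in range(epochs):
        # Forward pass: compute the model's output.
        h = sigmoid(X @ W1 + b1)
        out = sigmoid(h @ W2 + b2)

        # Step 1: calculate the error (assumed: mean squared error).
        error = float(np.mean((out - y) ** 2))
        history.append(error)

        # Step 2: stop once the error is small enough.
        if error < tol:
            break

        # Backward pass (backpropagation): chain rule from output to input.
        d_z2 = 2.0 * (out - y) / len(X) * out * (1.0 - out)  # dE/d(output pre-activation)
        d_W2 = h.T @ d_z2
        d_b2 = d_z2.sum(axis=0, keepdims=True)
        d_z1 = (d_z2 @ W2.T) * h * (1.0 - h)  # dE/d(hidden pre-activation)
        d_W1 = X.T @ d_z1
        d_b1 = d_z1.sum(axis=0, keepdims=True)

        # Step 3: update the parameters (assumed: plain gradient descent).
        W1 -= lr * d_W1
        b1 -= lr * d_b1
        W2 -= lr * d_W2
        b2 -= lr * d_b2

    return (W1, b1, W2, b2), history


def predict(params, X):
    """Step 4: feed new inputs through the trained network."""
    W1, b1, W2, b2 = params
    return sigmoid(sigmoid(X @ W1 + b1) @ W2 + b2)


params, history = train_xor()
print(f"epochs run: {len(history)}, final error: {history[-1]:.4f}")
print(predict(params, np.array([[0, 1], [1, 1]], dtype=float)).round(2))
```

Here `tol` stands in for "the error becomes minimum": training stops when the error drops below it or after `epochs` iterations, whichever comes first. With a different seed or learning rate, the network may need more epochs or may settle in a poorer local minimum.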
